C is for Cookie Monster, D for Dessert!

„Come on, get ready, come on, get set. It's time for the Sesame Street alphabet“

Introducing the alphabet song with these lines, Elmo has lured generations of children into the world of writings and helped them to live up to their full potential of human intelligence. However, like every classic he and his friends from sesame street don’t get outdated and seem to play a similar role also in modern times - the only difference being that now it’s artificial intelligence which they advance in it’s ability to read and write and speak.

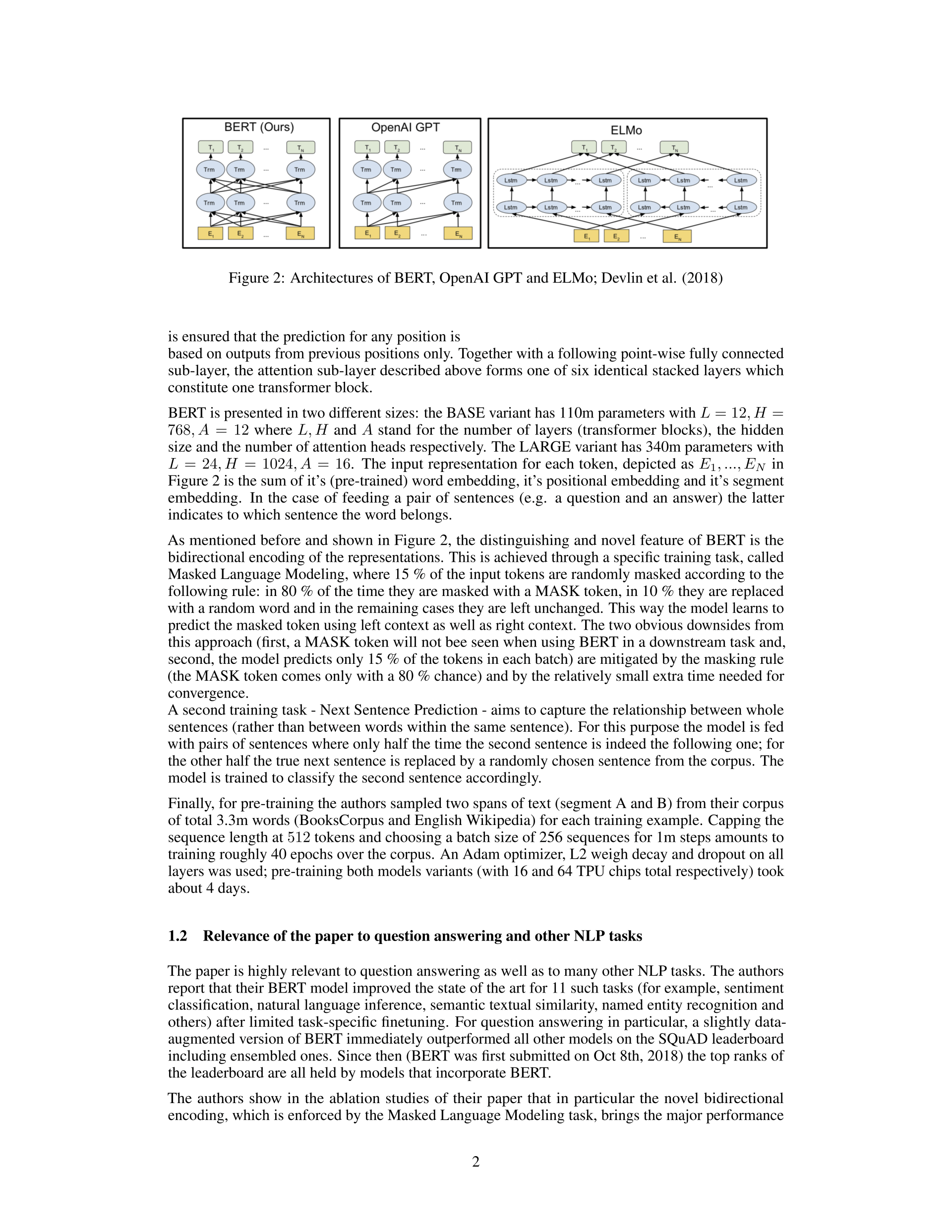

ELMo - short for: „Embeddings from Language Models“ - as introduced by Peters et al. in 2017 was the first of now several pre-trained language models which have entered the stage and immediately taken a central role in the world of natural language processing (NLP). They can be considered as the grown-up siblings of the pre-trained word vectors which were proposed in the seminal paper by Mikolov et al. only four years ago and are classified as the most important contribution to NLP over the last decade by many researchers in the field. What struck them at the time was the possibility to perform simple vector operations with these embeddings and achieve meaningful results. For example: adding and subtracting vector representations for the respective words yields correct answers for questions like: „man is to woman what king is to ?“. Or : „Paris is to France what Berlin is to ?“.

However, while capturing a fair share of the syntax and the semantics of a word, these embeddings had difficulties to cope with more subtle dimensions like polysemy, i.e. different meanings in different contexts for the same word. This is the key issue which the pre-trained language models address: they assign deep contextualized word representations to each token (that is: an indexed vector for each word in the defined vocabulary) which are a function of the entire input sentence. To get these representations a bidirectional LSTM (in the case of ELMo) or a model with several stacked transformer layers (as for BERT, another variant of a pre-trained language model by Devlin et al., 2018) is trained on huge corpora of text. BERT for example, which is the acronym for „Bidirectional Encoder Representations from Transformers“, uses BooksCorpus and English Wikipedia which together provide more than 3,2 billion words for pre-training.

The real beauty of these models, however, lies not in their technical sophistication or the sheer size of datasets they use for training, but in the ease with which they can be used for all sorts of NLP tasks. BERT in particular has taken the scene by storm and with simple fine-tuning advanced the state of the art for eleven recognized NLP tasks right from the start. On the leaderboard of the Stanford Question Answering Dataset (SQuAD) which is the most prominent challenge to asses the reading comprehension of an algorithm, all top ranking entries now incorporate BERT as a building block.

So if you want your machine to understand, produce and work with text you now should go back to early days and say hello again to your friends from sesame street. Take BERT as a starter, add a few layers for your desired downstream task as fine-tuning, and then have your model sing along with the cookie monster: „C is for Cookie Monster, D for desert“!

For a more technical summary of BERT continue reading below.

References:

Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg S Corrado, Jeff Dean (2013), Distributed representations of words and phrases and their compositionality, In Advances in Neural Information Processing Systems 26, pages 3111–3119. Curran Associates, Inc.

Matthew E. Peters, Mark Neumann, Mohit Iyyer, Matt Gardner, Christopher Clark, Kenton Lee, Luke Zettlemoyer (2017), Deep contextualized word representations, arXiv:1802.05365v2

Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova (2018), BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, arXiv:1810.04805.